Abstract

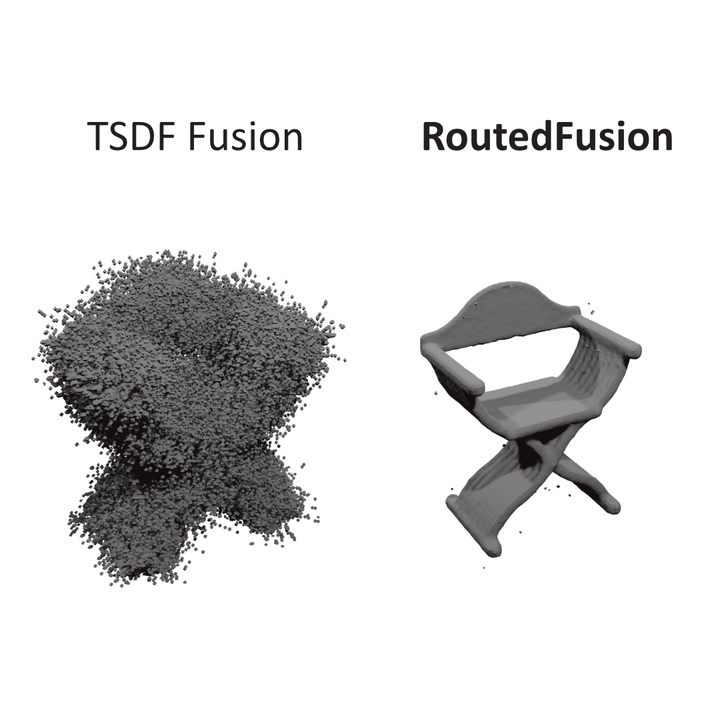

The efficient fusion of depth maps is a key part of most state-of-the-art 3D reconstruction methods. Besides requiring high accuracy, these depth fusion methods need to be scalable and real-time capable. To this end, we present a novel real-time capable machine learning-based method for depth map fusion. Similar to the seminal depth map fusion approach by Curless and Levoy, we only update a local group of voxels to ensure real-time capability. Instead of a simple linear fusion of depth information, we propose a neural network that predicts non-linear updates to better account for typical fusion errors. Our network is composed of a 2D depth routing network and a 3D depth fusion network, which efficiently handle sensor-specific noise and outliers. This is especially useful for surface edges and thin objects, for which the original approach suffers from thickening artifacts. Our method outperforms the traditional fusion approach and related learned approaches on both synthetic and real data. We demonstrate the performance of our method in reconstructing fine geometric details from noise- and outlier-contaminated data on various scenes.

Method

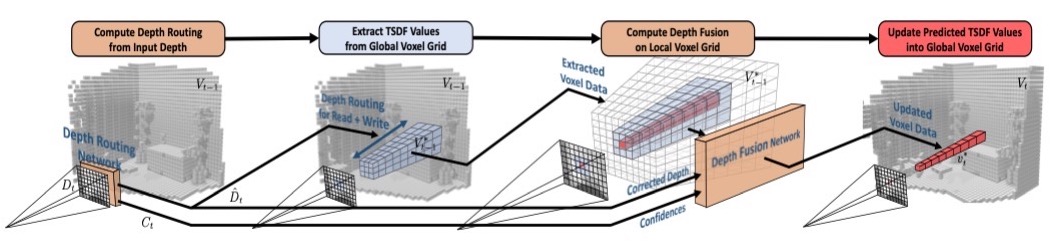

Our method consists of four key stages. First, the depth routing network estimates a corrected depth map and a confidence map from a noisy, outlier-contaminated depth map. Second, the corrected depth map guides the extraction of a local canonical TSDF volume from the global TSDF volume. Third, the canonical volume is passed, together with the corrected depth map and the confidence map, through the depth fusion network, which predicts optimal updates for the canonical TSDF volume given its old state and the new measurement. Finally, the updated canonical TSDF volume is integrated back into the global TSDF volume.
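For context, the linear update that the depth fusion network replaces can be sketched as a per-voxel running weighted average in the style of Curless and Levoy. This is a minimal illustration of that baseline only, not of the learned method: the function names, the truncation distance `trunc`, the unit observation weight, and the choice to update only voxels inside the truncation band along a single ray are assumptions made for this sketch.

```python
def tsdf_update(tsdf, weight, sdf_obs, w_obs=1.0, trunc=0.05):
    """Linear (running weighted average) update of a single voxel."""
    # Truncate the newly observed signed distance to [-trunc, trunc].
    d = max(-trunc, min(trunc, sdf_obs))
    new_weight = weight + w_obs
    new_tsdf = (weight * tsdf + w_obs * d) / new_weight
    return new_tsdf, new_weight


def fuse_ray(volume, weights, voxel_depths, measured_depth, trunc=0.05):
    """Fuse one depth measurement into the voxels along one viewing ray.

    Only voxels inside the truncation band around the measured surface
    are touched (a simplification chosen for this sketch).
    """
    for i, z in enumerate(voxel_depths):
        sdf = measured_depth - z  # positive in front of the surface
        if abs(sdf) <= trunc:
            volume[i], weights[i] = tsdf_update(
                volume[i], weights[i], sdf, trunc=trunc
            )
    return volume, weights
```

Because every measurement is averaged in with the same linear rule, a single outlier or a measurement from the far side of a thin structure shifts the stored distance, which is the kind of error the learned non-linear update is meant to reduce.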


Results

We evaluate our method on synthetic as well as real-world data. We compare the results to several existing state-of-the-art and baseline methods.

Synthetic Data

To compare our method with existing learning-based and handcrafted methods, we evaluate its performance in fusing synthetic depth maps rendered from the ShapeNet dataset and augmented with an artificial depth-dependent noise distribution.
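As an illustration of what a depth-dependent noise augmentation can look like, the sketch below perturbs each valid depth value with zero-mean Gaussian noise whose standard deviation grows linearly with depth. The exact noise distribution used in the experiments is not specified here, so the linear model, the `scale` parameter, and the function name are all assumptions.

```python
import random


def add_depth_dependent_noise(depth_map, scale=0.005, seed=None):
    """Return a noisy copy of a depth map given as a list of rows.

    Assumed noise model (hypothetical): sigma = scale * depth.
    Non-positive depths are treated as invalid and left unchanged.
    """
    rng = random.Random(seed)
    noisy = []
    for row in depth_map:
        noisy_row = []
        for z in row:
            if z <= 0.0:
                noisy_row.append(z)
            else:
                noisy_row.append(z + rng.gauss(0.0, scale * z))
        noisy.append(noisy_row)
    return noisy
```

Passing a fixed `seed` makes the augmentation reproducible, which helps when the same noisy inputs need to be fed to several fusion methods.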


We show quantitatively and qualitatively that our method outperforms existing depth fusion and 3D representation methods. In particular, it generalizes significantly better to unseen shapes than existing learning-based methods.
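The four metrics used in the quantitative comparison (MSE, MAD, accuracy, IoU) can be sketched on flattened TSDF grids as follows. The definitions here are assumptions for illustration: MSE and MAD are taken over TSDF values, accuracy is the percentage of voxels whose inside/outside sign matches the ground truth, and IoU is computed on the occupancy obtained by thresholding the TSDF at zero. The evaluation protocol actually used may differ, for example in which voxels are masked out.

```python
def tsdf_metrics(pred, target):
    """Compare two flattened TSDF grids of equal length."""
    n = len(pred)
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / n
    mad = sum(abs(p - t) for p, t in zip(pred, target)) / n

    # Assumed convention: negative TSDF values mean "inside" (occupied).
    pred_occ = [p < 0 for p in pred]
    target_occ = [t < 0 for t in target]

    acc = 100.0 * sum(a == b for a, b in zip(pred_occ, target_occ)) / n
    inter = sum(a and b for a, b in zip(pred_occ, target_occ))
    union = sum(a or b for a, b in zip(pred_occ, target_occ))
    iou = inter / union if union else 1.0  # both grids empty: perfect match

    return {"mse": mse, "mad": mad, "acc": acc, "iou": iou}
```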

| Method | MSE [e-05] | MAD | Acc. [%] | IoU [0, 1] |
|---|---|---|---|---|

We also investigate the robustness of our method to higher input noise levels. To this end, we augment the input depth maps with different noise levels and fuse them using both standard TSDF Fusion and our RoutedFusion.


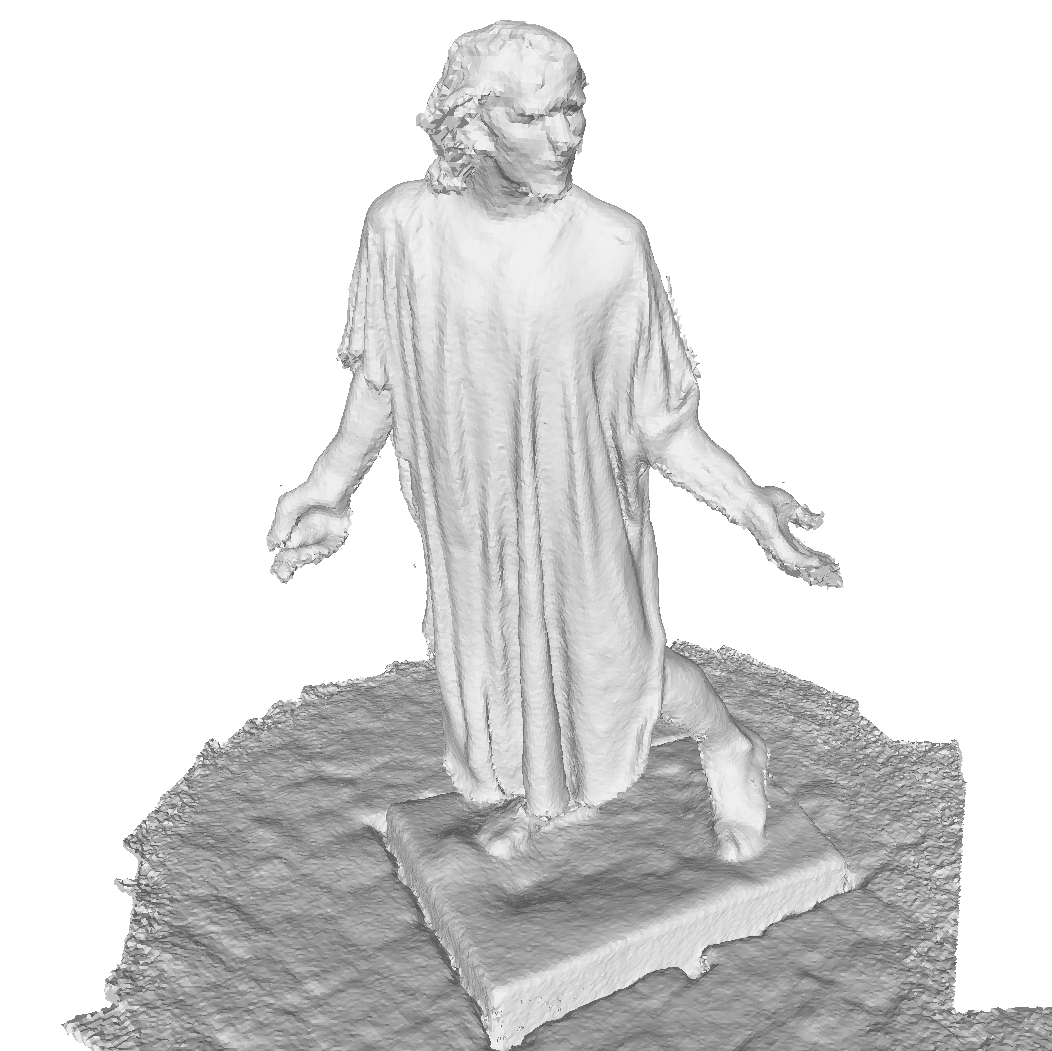

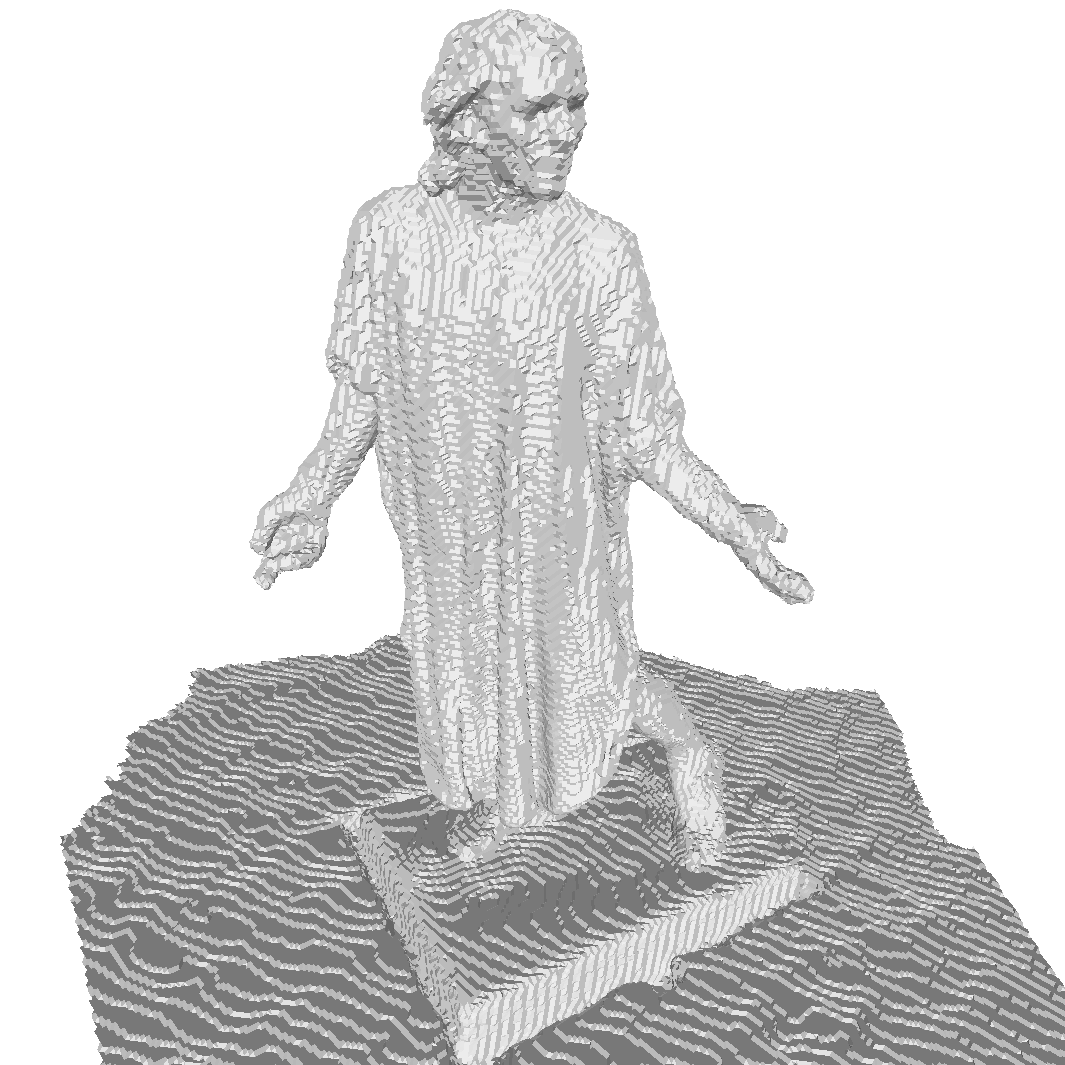

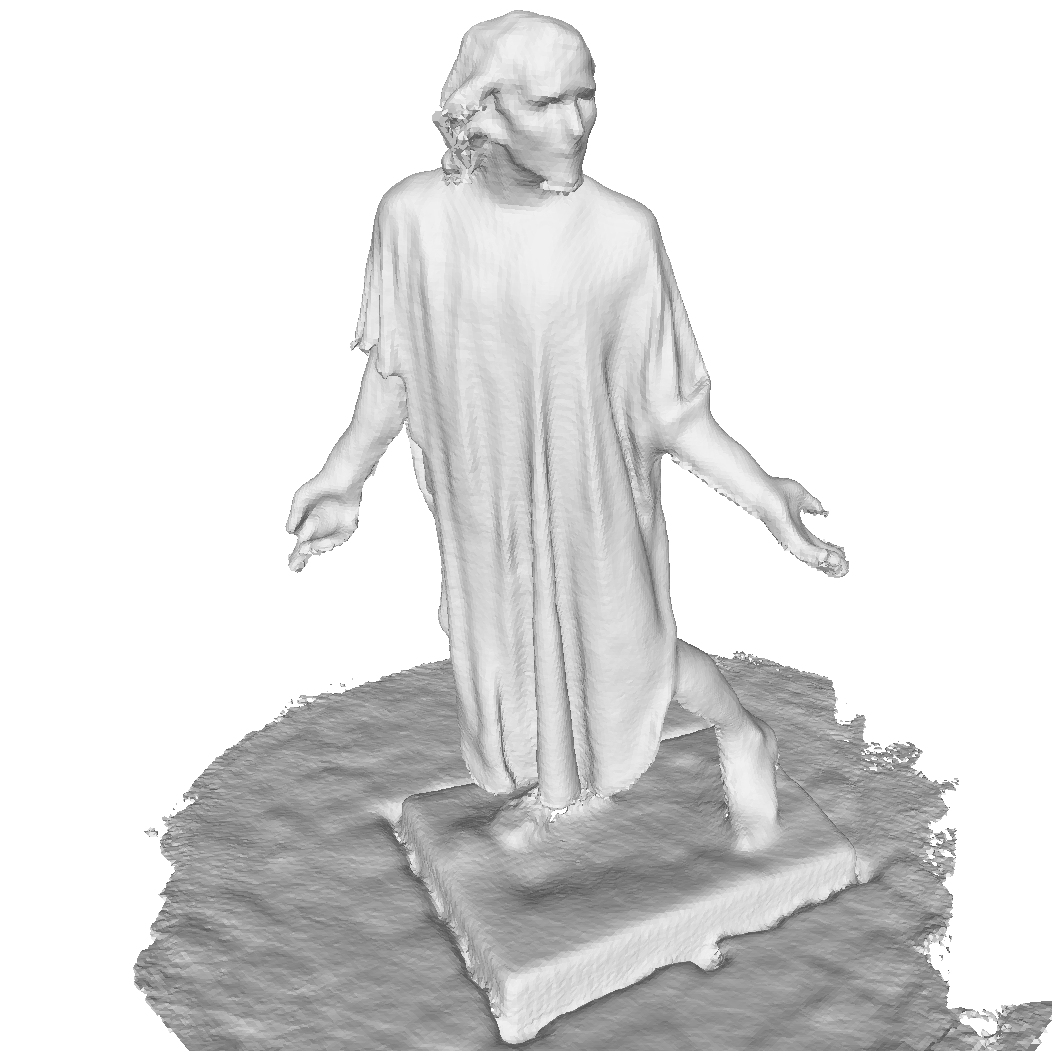

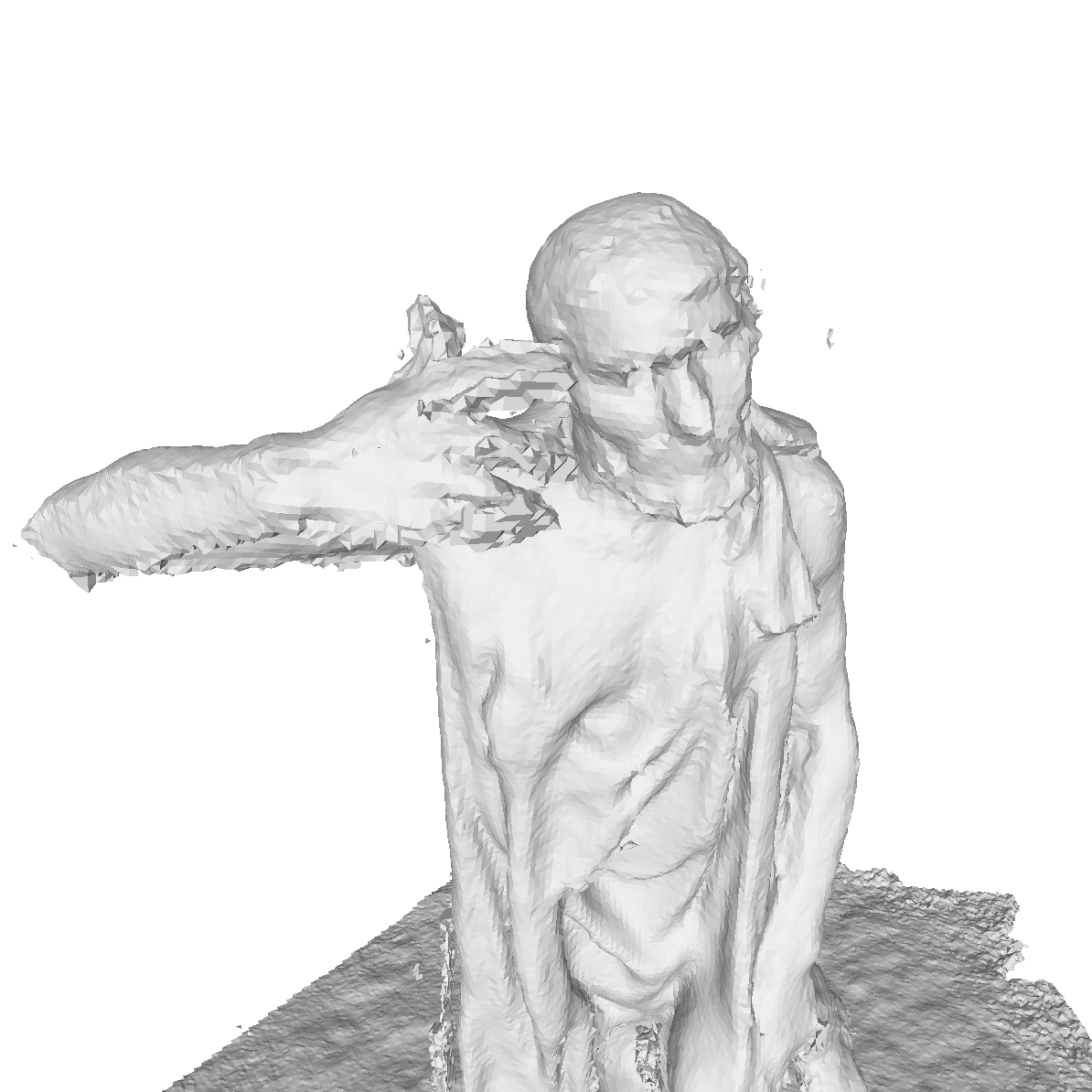

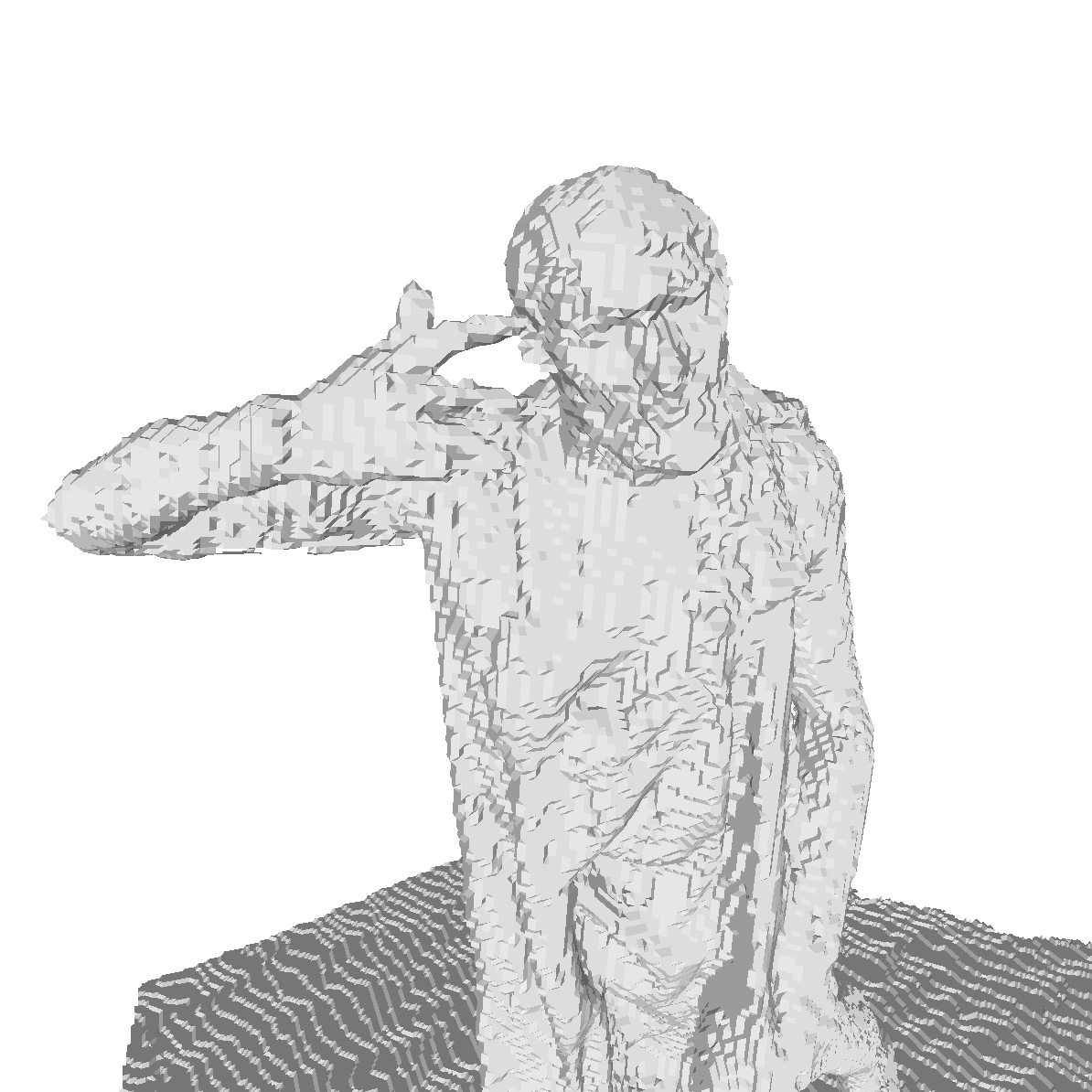

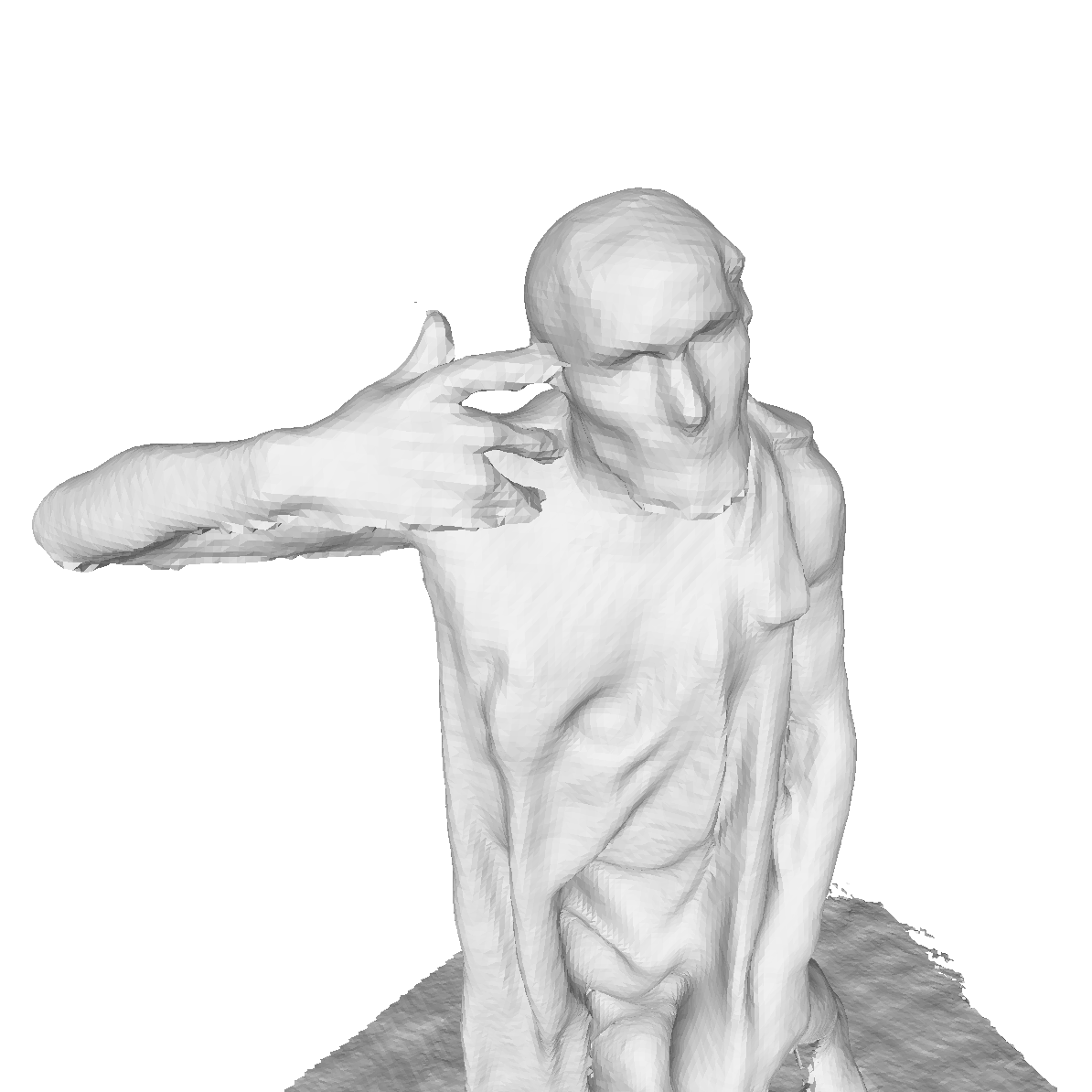

Real-World Data

We also evaluate our pipeline on real-world data from the Scene3D dataset, where it compares favourably to existing depth fusion methods.


Conclusion

With RoutedFusion, we propose a real-time, learning-based depth map fusion method. This method allows for better fusion of imperfect depth maps and better reconstruction of 3D geometry from range imagery in real-world applications. RoutedFusion requires only very little training data, and we show that its compact neural networks can be used effectively in real-world applications without the risk of overfitting. This allows for easy transfer to new sensors and scenarios where only little training data is available.